8 Financial Modeling Best Practices for Executive Decision-Making

Brian's Banking Blog

In a banking environment defined by compressed margins and mounting regulatory scrutiny, the quality of your financial models directly dictates the quality of your strategic decisions. A well-constructed model is not merely a forecasting tool; it is a dynamic asset for stress testing capital adequacy, evaluating M&A opportunities, and optimizing your balance sheet. Conversely, a poorly designed model, riddled with inconsistent formulas or undocumented assumptions, introduces significant operational risk and can lead to flawed, value-destructive conclusions.

This guide provides a definitive framework of eight financial modeling best practices essential for banking executives. We will dissect the non-negotiable principles that transform a simple spreadsheet into a powerful decision-making engine. From structuring models for intuitive clarity to implementing robust error checks and version control, each practice is designed to enhance accuracy, transparency, and strategic value. Following these standards is not just about technical proficiency; it's a critical component of institutional governance and a prerequisite for creating financial projections that impress investors and satisfy regulators.

For executives and directors, mastering these concepts means you can confidently challenge assumptions, interpret outputs, and steer your institution with greater precision. For analysts, it provides a blueprint for building models that are not only accurate but also auditable and scalable. We will illustrate how embedding these practices, supported by granular data intelligence from platforms like Visbanking, enables your team to move from reactive analysis to proactive, data-driven strategy. The following sections offer a clear, actionable framework for elevating your institution's modeling capabilities.

1. Model Structure and Flow

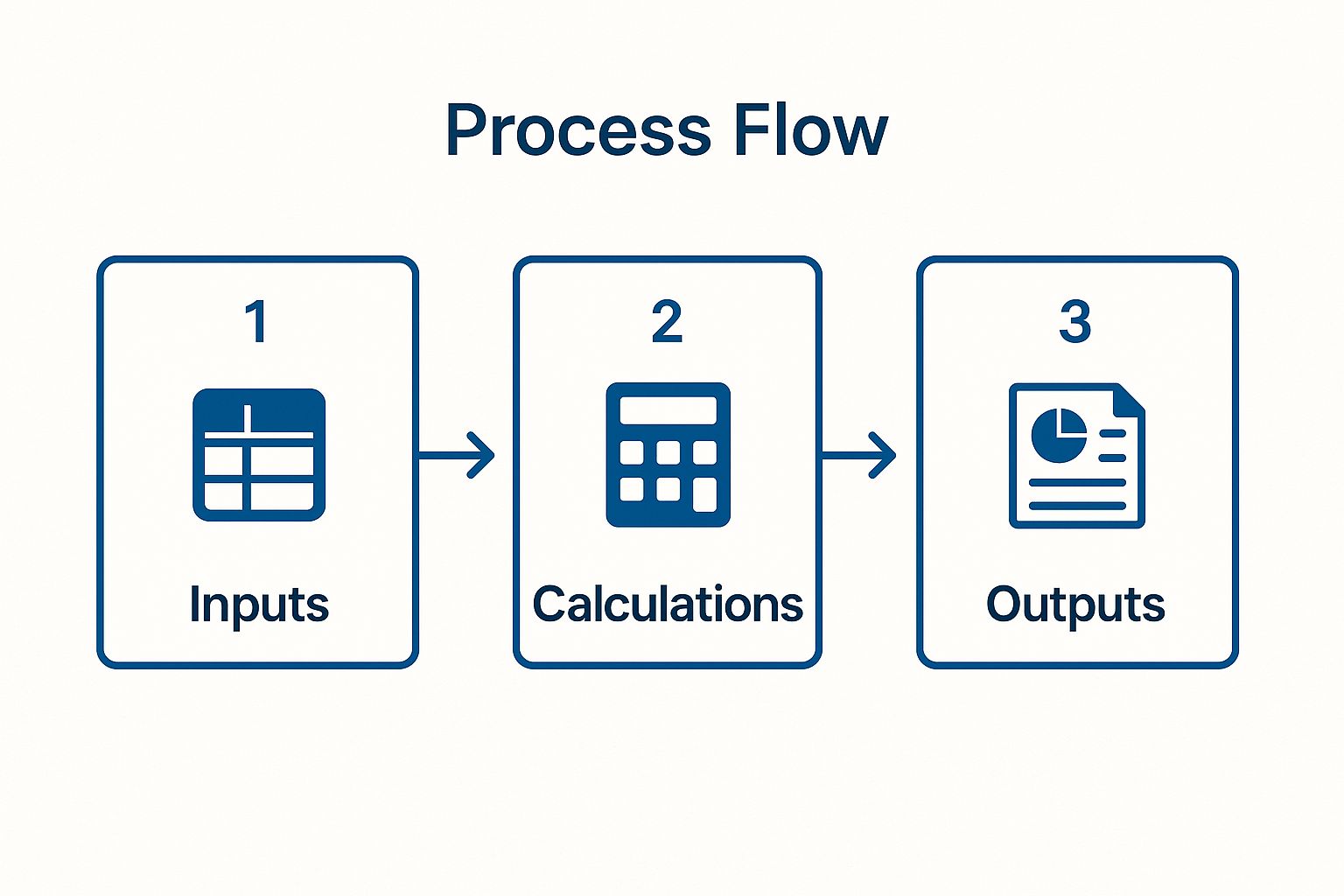

A hallmark of sound financial modeling is a disciplined and logical structure. A well-structured model serves as a clear roadmap, guiding users from initial assumptions to final conclusions without ambiguity. This fundamental principle dictates that a model should flow intuitively, typically from left to right and top to bottom across worksheets, with a distinct separation between inputs, calculations, and outputs.

This organization is not merely for aesthetic purposes; it is a critical risk management tool. When a model is built logically, it becomes significantly easier to audit, debug, and hand over to colleagues or regulators. For banking executives, a transparent structure ensures that the underlying assumptions driving a key decision, such as a credit extension or M&A valuation, are easily identifiable and defensible. An unstructured, chaotic model introduces unacceptable operational risk and undermines the credibility of its results.

The Logic of a Structured Model

The core principle is to isolate different components of the model to prevent errors and enhance clarity.

- Inputs/Assumptions: All key drivers and assumptions (e.g., growth rates, interest rates, credit loss provisions) should be consolidated in one dedicated section or worksheet. This allows for quick scenario analysis and sensitivity testing.

- Calculations/Processing: This is the model's engine room where the inputs are processed. Formulas in this section should link directly to the input sheet, never containing hard-coded numbers. This maintains the model's integrity.

- Outputs/Summaries: The final results, charts, and dashboards are presented here. This section should be designed for executive review, presenting key findings like valuation summaries, returns analysis, or covenant compliance dashboards in a clean, digestible format.

The ideal progression within a financial model runs in one direction: inputs feed the calculations, and the calculations feed the outputs.

This linear progression ensures that any changes to inputs flow consistently through the calculations to update the final outputs, maintaining transparency and reliability. By adhering to this structure, analysts can build models that are not only powerful but also auditable and trustworthy, enabling senior leaders to make high-stakes decisions with greater confidence.
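The separation described above can be sketched outside a spreadsheet as well. The following is a minimal illustration, not a production model: the `Inputs` fields, the loan figures, and the function names are all hypothetical and chosen only to show inputs, calculations, and outputs living in three distinct places.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inputs:
    """Inputs/assumptions: the only place where numbers are typed in."""
    opening_loans: float   # starting loan balance (hypothetical, $ millions)
    loan_growth: float     # annual loan growth rate
    loan_yield: float      # yield earned on the opening balance each year
    years: int             # forecast horizon


def calculate(inputs):
    """Calculations: every figure is derived from Inputs, never hard-coded."""
    rows = []
    balance = inputs.opening_loans
    for year in range(1, inputs.years + 1):
        interest_income = balance * inputs.loan_yield
        balance *= 1 + inputs.loan_growth
        rows.append({"year": year,
                     "interest_income": interest_income,
                     "closing_loans": balance})
    return rows


def summarize(rows):
    """Outputs: a compact summary intended for executive review."""
    return {
        "total_interest_income": sum(r["interest_income"] for r in rows),
        "final_loans": rows[-1]["closing_loans"],
    }


base = Inputs(opening_loans=1000.0, loan_growth=0.05, loan_yield=0.06, years=3)
summary = summarize(calculate(base))
print(summary)
```

Because `calculate` reads only from `Inputs`, changing one assumption (for example `loan_growth`) flows through to the summary without touching any formula.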

2. Input Centralization and Assumption Management

A cornerstone of reliable financial modeling is the strict centralization of all inputs and assumptions. This discipline involves consolidating every key driver of the model into a single, dedicated worksheet or section. Instead of burying variables like growth rates or credit default assumptions deep within complex formulas, they are placed in a transparent, accessible location. All subsequent calculations throughout the model must then link directly to this input hub, eliminating hard-coded numbers entirely.

For bank executives, this practice is non-negotiable. It transforms a complex model from a "black box" into an auditable and dynamic decision-making tool. When evaluating a commercial real estate loan, for instance, a centralized input sheet allows a credit committee to instantly stress-test the impact of a 50-basis-point increase in cap rates or a 10% decline in projected lease income. This level of agility and transparency is crucial for managing risk and making informed capital allocation decisions under pressure.

The Logic of a Centralized Input Sheet

Centralizing assumptions mitigates the risk of hidden errors and enhances the model's strategic value by making scenario analysis straightforward. This approach hinges on several key components:

- Categorization: Group related inputs logically. For an M&A model, this means separating deal assumptions (e.g., purchase price, synergy targets) from target company assumptions (e.g., revenue growth, margins) and macroeconomic drivers (e.g., Fed Funds rate).

- Source Documentation: Every critical assumption should be documented with its source and the date it was last updated. An assumption for a loan portfolio's net charge-off rate, for example, should be traceable to either historical performance or benchmark data from a platform like Visbanking, creating a clear audit trail essential for internal governance and regulatory scrutiny. Effective input management is a core component of sound data governance in banking.

- Controls and Validation: Implement controls like data validation dropdowns for categorical inputs (e.g., "High/Medium/Low" growth scenarios) and set reasonable minimum/maximum ranges for key numeric inputs. This prevents simple data entry errors from corrupting the model’s output.
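As a rough sketch of how source documentation and validation controls might look in code, the example below attaches a source, a last-updated date, and an allowed range to each assumption. The `Assumption` class, the example values, the sources, and the dates are all hypothetical; a spreadsheet would achieve the same with data validation rules and a notes column.

```python
from dataclasses import dataclass
from datetime import date

# Allowed labels for a categorical input, mirroring a dropdown list.
ALLOWED_SCENARIOS = ("High", "Medium", "Low")


@dataclass(frozen=True)
class Assumption:
    name: str
    value: float
    source: str          # where the number came from
    last_updated: date   # when it was last refreshed
    minimum: float       # lowest value considered reasonable
    maximum: float       # highest value considered reasonable

    def validate(self):
        """Reject values outside the documented range."""
        if not (self.minimum <= self.value <= self.maximum):
            raise ValueError(
                f"{self.name}={self.value} is outside "
                f"[{self.minimum}, {self.maximum}]"
            )


def validate_scenario(label):
    """Reject any scenario label that is not in the dropdown list."""
    if label not in ALLOWED_SCENARIOS:
        raise ValueError(f"Unknown scenario {label!r}; use one of {ALLOWED_SCENARIOS}")
    return label


# Hypothetical entries for a commercial real estate loan model.
assumptions = [
    Assumption("cap_rate", 0.065, "Appraiser report (hypothetical)",
               date(2024, 1, 31), minimum=0.03, maximum=0.12),
    Assumption("net_charge_off_rate", 0.004, "Historical portfolio data (hypothetical)",
               date(2024, 1, 31), minimum=0.0, maximum=0.05),
]

for assumption in assumptions:
    assumption.validate()
growth_scenario = validate_scenario("Medium")
```

An out-of-range entry, such as a cap rate typed as 6.5 instead of 0.065, fails immediately at the input layer instead of silently distorting the outputs.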

By enforcing this disciplined separation of inputs, analysts build robust, flexible models. This empowers senior leaders to quickly understand a model's core drivers and confidently challenge assumptions, leading to more rigorous and defensible financial decisions.

3. Formula Consistency and Simplicity

A foundational element of reliable financial modeling is the disciplined use of consistent and simple formulas. A model riddled with overly complex, inconsistent, or "black box" calculations is a significant source of operational risk. Simple, transparent formulas ensure that the model is not only accurate but also easily auditable, understandable, and transferable to other team members or regulators.

For banking professionals, this principle is non-negotiable. When evaluating a commercial loan application or modeling the impact of interest rate changes on net interest margin, the logic must be transparent. A complex, nested formula that combines multiple steps into one cell might seem efficient, but it obscures the underlying logic, making it difficult to debug or validate. A model’s integrity is directly tied to the clarity of its calculations; simplicity fosters trust in the outputs.

The Logic of Simple and Consistent Formulas

The core principle is to break down complex calculations into logical, sequential steps, ensuring uniformity across the model.

- Break Down Complexity: Instead of a single, monolithic formula to calculate a metric like Free Cash Flow, each component (EBIT, Taxes, D&A, CapEx, Change in NWC) should be calculated on its own line. This step-by-step approach makes the logic explicit and easy to follow.

- Maintain Uniformity: If you calculate a loan loss provision for one loan portfolio, the exact same formula structure should be applied to all other portfolios. This consistency is crucial; for example, if you drag a formula across a row of time periods, it should function identically in each column without modification.

- Isolate Key Assumptions: Never hard-code an assumption like a 3.5% loan growth rate directly into a formula. Instead, link to a dedicated input cell. This practice, a cornerstone of effective financial modeling best practices, allows for effortless scenario and sensitivity analysis without having to hunt for embedded numbers.

Consider the calculation of a bank’s efficiency ratio. A poor model might use a single, unwieldy formula: =SUM(A1:A50)/(SUM(B1:B20)+SUM(C1:C10)). A superior approach breaks this down:

- Calculate Total Noninterest Expense.

- Calculate Net Interest Income.

- Calculate Total Noninterest Income.

- Finally, calculate Efficiency Ratio = Noninterest Expense / (Net Interest Income + Noninterest Income).

This transparent method not only reduces the chance of errors but also provides a clear audit trail. It allows senior leaders to question and understand each component of the calculation, ensuring that strategic decisions are based on a verifiable and robust analytical foundation.
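The same step-by-step breakdown can be illustrated in a few lines of code. This is a sketch with made-up figures (in $ millions); the line-item names are assumptions, and net interest income is taken as interest income less interest expense.

```python
# Hypothetical line items, in $ millions.
noninterest_expense_items = {"salaries": 30.0, "occupancy": 8.0, "other": 12.0}
noninterest_income_items = {"service_charges": 15.0, "other_fees": 5.0}
interest_income = 90.0
interest_expense = 30.0

# Step 1: total noninterest expense.
total_noninterest_expense = sum(noninterest_expense_items.values())

# Step 2: net interest income.
net_interest_income = interest_income - interest_expense

# Step 3: total noninterest income.
total_noninterest_income = sum(noninterest_income_items.values())

# Step 4: efficiency ratio, built only from the named steps above.
efficiency_ratio = total_noninterest_expense / (
    net_interest_income + total_noninterest_income
)

print(f"Efficiency ratio: {efficiency_ratio:.1%}")  # prints "Efficiency ratio: 62.5%"
```

Each intermediate value has a name a reviewer can inspect, which is the point of giving every component its own line in a spreadsheet.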

4. Error Checking and Model Validation

A financial model, no matter how complex, is only as reliable as its accuracy. Robust error checking and model validation are not optional final steps; they are indispensable components of financial modeling best practices, integrated throughout the build process. For banking professionals, a model without built-in integrity checks is a source of significant operational and reputational risk, potentially leading to flawed credit decisions, mispriced assets, or inaccurate capital adequacy forecasts.

This disciplined practice involves creating a systematic framework to identify and flag inconsistencies automatically. It transforms the model from a static calculator into a dynamic, self-auditing tool. An effective validation system provides senior leadership with the assurance that decisions are based on arithmetically sound and logically consistent analysis, a non-negotiable requirement when presenting to a board, audit committee, or regulatory body.

The Mechanics of Model Integrity

The core principle is to build a network of internal controls that validate the model's logic and calculations in real time. These checks ensure that fundamental accounting and financial principles are never violated.

- Balance Checks: The most fundamental check, ensuring the balance sheet always balances (Assets = Liabilities + Equity) on every time-series period. Any deviation, no matter how small, must trigger an immediate and highly visible error flag. For example, a cell displaying "BALANCES" or "ERROR: $5.01" provides an instant status.

- Cash Flow Reconciliation: A check to confirm that the beginning cash balance, plus the net change in cash from the cash flow statement, correctly equals the ending cash balance on the balance sheet. This validates the linkage between the three primary financial statements.

- Logical Flags: Implementing checks for logical impossibilities, such as negative cash balances, debt levels exceeding pre-defined covenants, or regulatory capital ratios (like Tier 1 Capital) falling below minimum thresholds.

- Error Dashboard: A centralized summary worksheet that aggregates all error checks from across the model. A single "Model OK" or "ERROR" cell on this dashboard provides an at-a-glance confirmation of the model's integrity.

These automated controls act as a first line of defense, preventing simple human errors from escalating into material misstatements. For instance, a model projecting a bank's capital adequacy can use conditional formatting to highlight in red any period where the CET1 ratio falls below the 4.5% regulatory minimum, providing an instant visual alert.
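A minimal sketch of these checks, rolled up into a single dashboard status, might look like the following. The period data is invented, the field names are hypothetical, and the half-cent tolerance is an assumption added only to absorb floating-point rounding; the 4.5% CET1 threshold is the one cited above.

```python
TOLERANCE = 0.005      # assumption: half a cent, to absorb floating-point noise
CET1_MINIMUM = 0.045   # the 4.5% minimum cited in the text


def balance_check(assets, liabilities, equity):
    """Return 'BALANCES' or an error string showing the size of the gap."""
    diff = assets - (liabilities + equity)
    if abs(diff) < TOLERANCE:
        return "BALANCES"
    return f"ERROR: ${abs(diff):,.2f}"


def run_checks(p):
    """Run every integrity check for one period; True means the check passed."""
    return {
        "balance_sheet": balance_check(p["assets"], p["liabilities"], p["equity"]) == "BALANCES",
        "cash_reconciles": abs(p["begin_cash"] + p["net_change_cash"] - p["end_cash"]) < TOLERANCE,
        "cash_non_negative": p["end_cash"] >= 0,
        "cet1_above_minimum": p["cet1_ratio"] >= CET1_MINIMUM,
    }


def dashboard(periods):
    """Aggregate all checks into one status plus a list of failures."""
    failures = [(p["label"], name)
                for p in periods
                for name, ok in run_checks(p).items()
                if not ok]
    return ("Model OK" if not failures else "ERROR"), failures


# Hypothetical forecast periods; the second one contains two deliberate problems.
periods = [
    {"label": "2025", "assets": 1000.0, "liabilities": 900.0, "equity": 100.0,
     "begin_cash": 50.0, "net_change_cash": 10.0, "end_cash": 60.0, "cet1_ratio": 0.110},
    {"label": "2026", "assets": 1100.0, "liabilities": 1000.0, "equity": 94.99,
     "begin_cash": 60.0, "net_change_cash": -5.0, "end_cash": 55.0, "cet1_ratio": 0.041},
]

status, failures = dashboard(periods)
print(status, failures)
```

The single `status` value plays the role of the "Model OK" or "ERROR" cell, while `failures` tells the analyst which period and which check to investigate.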

By embedding these validation mechanisms, analysts build models that are not just powerful but also resilient and defensible. This proactive approach to quality control ensures that the outputs guiding strategic banking decisions are trustworthy, allowing executives to act with greater certainty and control.

5. Documentation and Model Transparency

A financial model without clear documentation is a black box—an unacceptable risk in the highly regulated banking environment. Adhering to the best practices of thorough documentation and model transparency transforms a complex spreadsheet into a reliable, auditable asset. This principle ensures that a model's purpose, methodology, assumptions, and limitations are explicitly recorded, allowing others to understand, maintain, and correctly use it long after its original creator has moved on.

For banking leaders, this is a cornerstone of effective governance and risk management. When a regulator questions the assumptions in a capital stress test or an investment committee challenges a valuation, comprehensive documentation provides a defensible record of the model’s logic. It eliminates ambiguity and ensures continuity, preventing the loss of institutional knowledge and reducing the operational risk associated with key-person dependency. A transparent model is a trustworthy model, reinforcing the credibility of the decisions it supports.

The Logic of a Transparent Model

The primary goal is to create a complete and accessible record of the model’s construction and intended use, effectively building a user manual alongside the model itself.

- Assumption & Methodology Log: A dedicated section or document should detail every key assumption (e.g., credit loss forecasts, deposit growth rates, non-interest income drivers). It must explain the rationale, data source, and date for each input, providing a clear audit trail.

- User Guide & Instructions: Clear instructions on how to operate the model are essential. This includes guidance on running scenarios, updating inputs, and interpreting outputs, ensuring users can leverage the model without inadvertently corrupting its logic.

- Version Control: A robust version control log is non-negotiable. It must track all changes, detailing who made the change, when it was made, and why. This is critical for regulatory submissions and internal audits, demonstrating a disciplined change management process.

For instance, a model assessing a $50 million credit facility for a commercial developer must include a detailed log explaining the assumptions for lease-up rates, tenant improvement costs, and the exit cap rate. The source for that cap rate—whether an appraiser’s report or market data—must be explicitly cited. Similarly, a bank’s ALM model requires an exhaustive document submitted to regulators that outlines the methodology for interest rate shock scenarios and deposit beta assumptions. This level of transparency is not optional; it is a fundamental requirement for sound risk management and strategic planning.

6. Scenario and Sensitivity Analysis

A static financial model, regardless of its precision, provides only a single-point estimate of the future. A cornerstone of superior financial modeling is the integration of dynamic scenario and sensitivity analysis. This practice transforms a model from a simple calculator into a strategic tool, allowing decision-makers to understand the full spectrum of potential outcomes by testing the impact of key variables. For banking leaders, this is not just an academic exercise; it's a critical component of risk management and strategic planning.

By building robust scenario capabilities, an analyst can quantify the potential impact of changing market conditions, competitive actions, or internal performance on a loan's serviceability or an acquisition's value. This proactive approach moves beyond a base-case forecast and prepares the institution for both upside opportunities and downside risks. A model that can instantly show the effect of a 100-basis-point interest rate hike or a 15% decline in commercial real estate values is invaluable for making resilient, informed decisions.

Building a Dynamic Decision Framework

The objective is to create a model that clearly demonstrates how outputs change when key assumptions are flexed. This requires a disciplined approach to identify and isolate the most critical drivers of value and risk.

- Scenario Analysis: This involves creating distinct, plausible versions of the future. For example, in an M&A model, analysts might build "Base Case," "Upside Case" (with aggressive synergy realization and a 10% revenue uplift), and "Downside Case" (with a 50% synergy shortfall and a 5% revenue decline) scenarios. Each scenario modifies a set of predefined assumptions simultaneously.

- Sensitivity Analysis: This technique isolates a single variable to measure its individual impact on a key output. For instance, a tornado chart can visually rank which assumption—like net interest margin or loan loss provisions—has the most significant influence on projected net income.

- Stress Testing: This is a more extreme form of scenario analysis focused on severe but plausible events, a vital practice for regulatory compliance and capital adequacy. Understanding these breaking points is fundamental to institutional resilience. For a deeper dive, you can explore the principles of stress testing for banks on visbanking.com.

By embedding these analytical layers, the model becomes a powerful communication tool. It shifts the conversation from "What is the answer?" to "What are the key drivers and risks we must manage?" This fosters a more sophisticated and risk-aware decision-making culture, enabling executives to act with a clear understanding of the potential range of outcomes.
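The difference between scenario analysis (several assumptions move together) and sensitivity analysis (one assumption moves at a time) can be sketched as follows. The one-line earnings formula, the base figures, and the upside synergy multiplier of 1.25 are hypothetical; the 10% revenue uplift, 5% revenue decline, and 50% synergy shortfall come from the example scenarios above.

```python
# Hypothetical base assumptions for a combined entity ($ millions).
BASE = {"revenue": 200.0, "margin": 0.25, "synergies": 10.0}

# Each scenario flexes several assumptions at once via multipliers.
SCENARIOS = {
    "Base Case":     {"revenue": 1.00, "synergies": 1.00},
    "Upside Case":   {"revenue": 1.10, "synergies": 1.25},  # 1.25 is an assumed figure
    "Downside Case": {"revenue": 0.95, "synergies": 0.50},
}


def earnings(a):
    """Deliberately simple output metric: operating profit plus synergies."""
    return a["revenue"] * a["margin"] + a["synergies"]


def flex_assumptions(base, multipliers):
    """Return a copy of the assumptions with the given multipliers applied."""
    return {k: v * multipliers.get(k, 1.0) for k, v in base.items()}


# Scenario analysis: one result per named scenario.
scenario_results = {name: earnings(flex_assumptions(BASE, m))
                    for name, m in SCENARIOS.items()}


def sensitivity(base, flex=0.10):
    """Flex each driver up and down on its own and rank drivers by output swing."""
    swings = {}
    for driver in base:
        high = earnings(flex_assumptions(base, {driver: 1 + flex}))
        low = earnings(flex_assumptions(base, {driver: 1 - flex}))
        swings[driver] = abs(high - low)
    return sorted(swings.items(), key=lambda kv: kv[1], reverse=True)


tornado = sensitivity(BASE)  # largest swing first, as in a tornado chart
print(scenario_results)
print(tornado)
```

In this toy setup, revenue and margin each swing earnings by roughly 10 while synergies swing it by roughly 2, so the ranking shows at a glance where management attention matters most.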

7. Version Control and Model Governance

Robust version control and model governance are cornerstones of sound financial modeling, transforming a model from a standalone tool into a trusted institutional asset. This discipline involves systematically managing model versions, changes, and access controls to ensure integrity, prevent errors from unauthorized modifications, and maintain a complete audit trail. For any model influencing high-stakes decisions, from regulatory capital calculations to M&A valuations, a lack of formal governance introduces unacceptable risk and undermines its credibility.

In the banking sector, where models underpin critical functions like credit risk assessment and stress testing, this practice is not optional; it is a regulatory and operational necessity. When regulators scrutinize a bank's capital adequacy model, a clear, documented history of changes, validations, and approvals is non-negotiable. Effective governance ensures that the model used for a multi-million dollar loan syndication is the correct, validated version, not an outdated copy on an analyst's local drive. This systematic oversight safeguards the bank from costly errors, regulatory penalties, and reputational damage.

The Logic of Model Governance

The core principle is to establish a framework that ensures a model’s accuracy, reliability, and security throughout its lifecycle. This is achieved by creating clear accountability and a transparent history of its evolution.

- Version Control: This involves implementing a clear numbering scheme (e.g., v1.0 for major releases, v1.1 for minor updates) and maintaining a master version in a secure, centralized location. This prevents the proliferation of conflicting or erroneous model copies.

- Change Management: All modifications, from a simple formula tweak to a major assumption overhaul, must be documented. This change log should include who made the change, when it was made, and the business justification for it. For significant models, a formal approval process involving senior stakeholders is essential.

- Access Control: Not everyone should have the ability to edit a critical model. Access rights should be tiered, granting read-only access to most users while restricting editing privileges to a small, authorized group of model developers and owners.

Implementing this structured governance framework is fundamental to effective model risk management. For instance, a bank's ALM (Asset Liability Management) model must undergo rigorous version control. A change to its interest rate sensitivity assumptions (a "minor" update, e.g., v2.3 to v2.4) must be logged and justified, while a change to the core calculation engine (a "major" update, e.g., v2.4 to v3.0) would require independent validation and formal approval from the risk committee. Without this process, the bank's board cannot confidently rely on the model’s outputs to make strategic decisions about its balance sheet. This disciplined approach ensures that every number produced is traceable, defensible, and reliable.
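One way to picture the change log and numbering scheme is the sketch below. It assumes a simple "vMAJOR.MINOR" format and a rule that major changes cannot be recorded without a named approver; the `Change` record, the function names, the dates, and the people are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Change:
    version: str        # version produced by this change
    author: str         # who made the change
    changed_on: date    # when it was made
    justification: str  # why it was made
    approved_by: str    # approver; empty for minor changes


def bump(version, major):
    """Return the next version: v2.3 -> v2.4 (minor) or v2.4 -> v3.0 (major)."""
    major_n, minor_n = (int(part) for part in version.lstrip("v").split("."))
    return f"v{major_n + 1}.0" if major else f"v{major_n}.{minor_n + 1}"


def record_change(log, current_version, author, changed_on,
                  justification, major=False, approved_by=""):
    """Append a change to the log, enforcing approval for major changes."""
    if major and not approved_by:
        raise PermissionError("Major changes require formal approval before release")
    entry = Change(bump(current_version, major), author, changed_on,
                   justification, approved_by)
    log.append(entry)
    return entry.version


log = []
version = "v2.3"
# Minor update: revised interest rate sensitivity assumptions.
version = record_change(log, version, "analyst_a", date(2024, 3, 1),
                        "Updated deposit beta assumptions")
# Major update: change to the core calculation engine, with approval.
version = record_change(log, version, "analyst_b", date(2024, 6, 1),
                        "Rebuilt repricing engine", major=True,
                        approved_by="risk_committee")
print(version, len(log))
```

The log answers the three questions raised above (who, when, and why) for every version, and the approval rule stops an unreviewed engine change from being released as the master copy.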

8. Performance Optimization and Scalability

A key differentiator of professional-grade financial modeling is a focus on performance and scalability. An efficient model calculates quickly and handles large datasets without crashing, while a scalable model can grow with business needs. For banking leaders, a model that slows to a crawl during a critical stress test or cannot accommodate a growing loan portfolio is a significant liability, delaying decisions and introducing operational risk.

This principle is about building for the future, not just the present. A model used for assessing a small commercial loan portfolio might work perfectly today, but will it handle a five-fold increase in data points after an acquisition? Ensuring a model is optimized means it remains a reliable and responsive tool as data volumes increase and analytical complexity grows, providing a stable foundation for strategic planning and risk management.

Designing for Speed and Growth

The core principle is to construct the model using efficient techniques that minimize computational load and allow for easy expansion.

- Efficient Formulas: Opting for computationally "cheaper" functions is critical. For instance, using an INDEX-MATCH combination is significantly faster than VLOOKUP on large, unsorted datasets. Likewise, SUMIFS and COUNTIFS are more efficient than array formulas for conditional aggregations.

- Data Management: Avoid referencing entire columns or rows in formulas (e.g., A:A). Instead, use dynamic named ranges or structured table references (e.g., Table1[Sales]) that automatically adjust as data is added. This prevents Excel from checking millions of empty cells.

- Calculation Control: For large, complex models, switching to manual calculation mode during development prevents constant recalculations, saving considerable time. The model can be fully recalculated with the F9 key when needed, giving the analyst control over the workflow.

For example, a corporate consolidation model that uses SUMIFS to pull data from multiple subsidiary sheets will outperform one that relies on thousands of volatile INDIRECT functions. Similarly, a portfolio risk model that uses helper columns to pre-calculate intermediate steps will be faster than one with long, nested mega-formulas that are difficult to evaluate and debug.

This foresight in model architecture ensures that analytical tools remain assets rather than bottlenecks. By prioritizing performance from the outset, analysts build robust models that can keep pace with the demands of a dynamic banking environment, enabling leaders to access critical insights without frustrating delays.

Best Practices Comparison Matrix

| Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Model Structure and Flow | Moderate - requires upfront planning | Medium - time for organizing worksheets | Clear, auditable, and easy-to-update models | Investment banking DCF, LBO, corporate budgeting | Easier debugging, faster updates, better collaboration |
| Input Centralization and Assumption Management | Moderate - needs disciplined input control | Medium - careful input validation | Consistent assumptions, simplified scenario analysis | Real estate, SaaS, manufacturing models | Reduces inconsistency, enables sensitivity analysis |
| Formula Consistency and Simplicity | Low to Moderate - simpler formulas but may expand worksheets | Low - focus on formula clarity | Reduced errors, easier audits, faster calculation | All models needing transparency and maintenance | Improved readability, reduced debugging time |
| Error Checking and Model Validation | Moderate to High - involves building checks and validation | Medium to High - added testing steps | Higher model accuracy and reliability | Regulatory, banking, cash flow, and revenue models | Early error detection, increased confidence |
| Documentation and Model Transparency | Moderate - requires detailed write-up and updates | Medium to High - time-intensive | Better knowledge transfer, reduces misuse | Project finance, regulatory submissions, corporate planning | Improved stakeholder trust, easier handoffs |
| Scenario and Sensitivity Analysis | Moderate to High - setup of scenarios and simulations | Medium to High - advanced scenario modeling | Deeper insights into risks and value drivers | M&A, commodity pricing, retail, tech startups | Supports strategic decisions, identifies key risks |
| Version Control and Model Governance | High - needs formal processes and access control | High - governance tools and monitoring | Controlled changes, audit trails, reduced errors | Banking risk, public company budgeting, regulatory models | Prevents unauthorized edits, supports compliance |
| Performance Optimization and Scalability | High - advanced formula and data management | Medium to High - requires expertise | Faster calculations, scalable model performance | Large data models, portfolio management, trading desks | Handles big data, improves user experience |

From Best Practice to Competitive Advantage

We have established the pillars of robust financial analysis: eight foundational financial modeling best practices. From establishing a logical model structure and centralizing assumptions to enforcing formula consistency and rigorous error checking, each principle serves a distinct purpose. Together, they form a cohesive framework for transforming raw data into strategic intelligence.

Mastering documentation for transparency, building dynamic scenario analysis, implementing strict version control, and optimizing for performance are not merely technical exercises. They are executive mandates. These practices directly mitigate operational risk, enhance the credibility of your strategic forecasts, and create a culture of analytical discipline. Adopting this framework ensures that when a board member or regulator questions an assumption, your team can provide a clear, defensible, and transparent answer backed by a sound, auditable model.

Turning Technical Excellence into Market Advantage

The true value of these principles is realized when they move from a checklist to an ingrained methodology. A well-constructed model is not a static report; it is a dynamic decision-making engine. It allows your institution to pivot with agility, whether that means stress-testing your loan portfolio against sudden interest rate hikes or evaluating the financial viability of a new branch expansion.

Consider a regional bank evaluating a small acquisition. A model built on these best practices would allow leadership to instantly toggle between best-case, base-case, and worst-case scenarios for deposit attrition post-merger. Instead of spending days rebuilding formulas, they can focus on the strategic implications of a 5% vs. a 15% attrition rate, making a faster, more informed capital allocation decision. This is where technical excellence translates directly into competitive advantage.

Key Takeaway: The goal of superior financial modeling is not just accuracy but strategic clarity. A well-designed model answers not only "what is the forecast?" but also "what if?" with reliability and immediacy, empowering leadership to act decisively.

Actionable Steps to Elevate Your Modeling Standards

Implementing these financial modeling best practices requires a deliberate, structured approach. It is a commitment to excellence that pays dividends in risk reduction and strategic insight.

Here are your immediate next steps:

- Conduct a Model Inventory and Audit: Begin by cataloging your institution's most critical models. Assess them against the eight principles discussed, identifying specific weaknesses, whether in documentation, formula consistency, or version control.

- Establish a Center of Excellence (CoE): Designate a team or individual responsible for setting and enforcing modeling standards across the organization. This group will own the templates, provide training, and serve as the governance